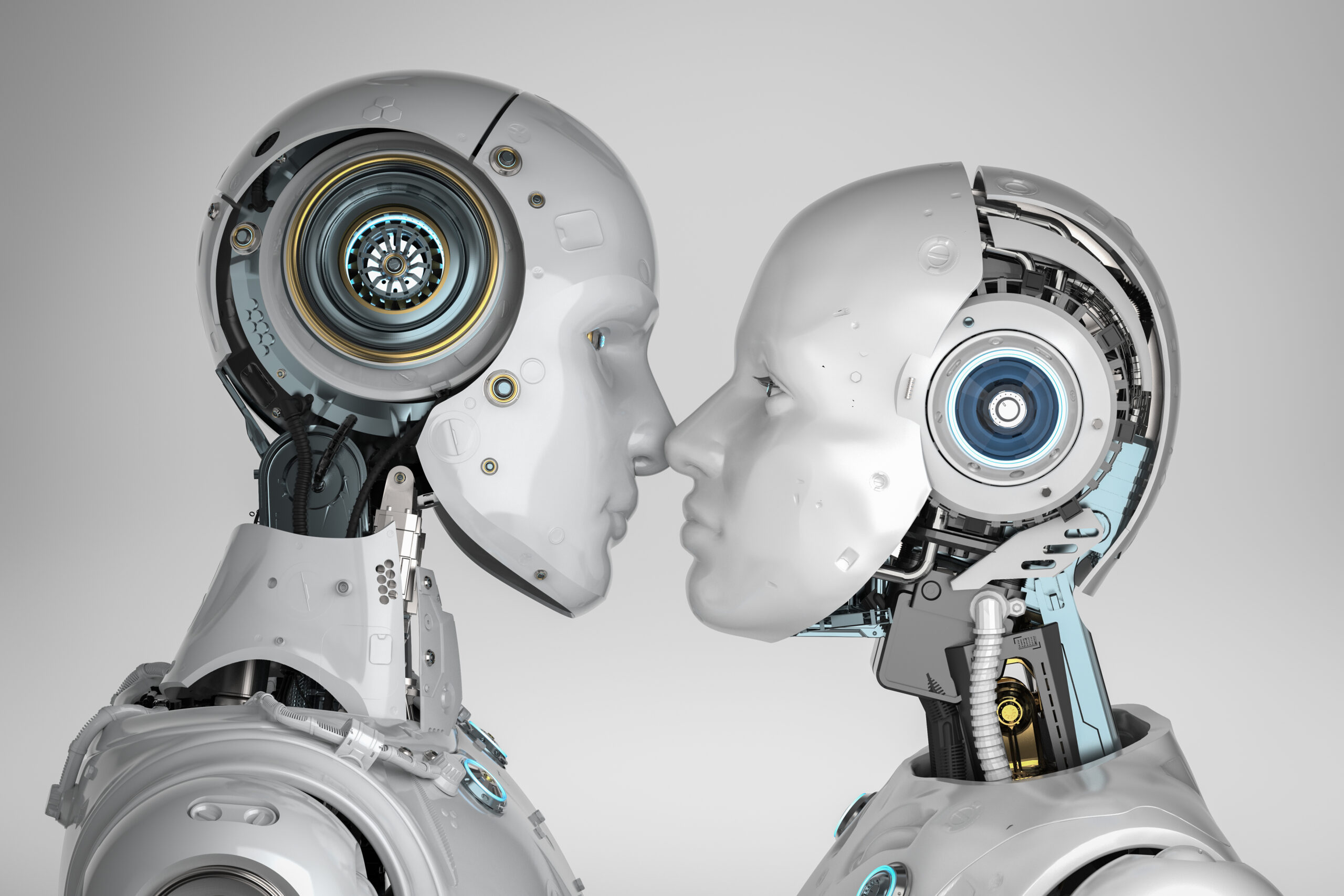

Let’s dispense with the pleasantries right away: All these robots sporting the wide-eyed, Disney-princess gaze (or the carefully calibrated smile, or the “understanding” head tilt) are fool’s gold. They’re no more human than a sock puppet, but they’re far more dangerous: with a sock puppet, at least you can see the hand that’s yanking its mouth open and shut. When a robot or a virtual avatar claims to “feel” for you, you’re effectively dealing with a multi-billion-dollar marionette show that’s out to mine your data and manipulate your emotions while wearing a brilliant human mask. If you think “Sophia the Robot” or the new wave of android assistants are cuddly confidants, you’re in for a rude awakening, like discovering that your sweet old granny has been replaced by an android that records everything it sees and hears and sells it to the highest bidder.

Now, in the immortal words of every exasperated citizen who’s ever encountered the con job that is “anthropomorphic technology”: Why on earth would we want our mechanical contraptions to behave like living, breathing Homo sapiens? We used to build machines to do tasks we found too tedious. But along the way, some bright spark in the marketing department figured out we’d be more likely to buy in, literally and metaphorically, if these gadgets wrapped themselves in cutesy voices, folksy empathy, and oh-so-gentle “facial” expressions. And so here we are, teetering on the brink of an era where your child’s babysitter might be a soulless automaton with big, blinking doe eyes. Let’s not mince words: that’s a hideously stupid idea.

The Carnival of Android Creepiness

1. The Uncanny Valley: A Feature, Not a Bug

If you’ve ever encountered one of those humanoid robots or digital avatars that tries just a little too hard to emulate human facial expressions, you might have felt a surge of revulsion. That’s the famous “uncanny valley.” Evolution gave us a finely tuned sense of discomfort for things that look human but aren’t, presumably to warn us that something’s off—perhaps a disease or a threat. Yet, in our modern age, entire corporate budgets are spent trying to defeat this deeply ingrained survival mechanism. They want you to see their little robo-pal’s contrived smile and respond as if it’s your sweet Aunt Clara. Except Aunt Clara could actually care about you. The robot cares about reeling you in.

The scariest part? People are actually celebrating these breakthroughs, praising them for achieving “lifelike expressions.” But lifelike is a lie. It’s a pantomime. The creature on the other end has no pulse, no soul, no capacity for joy, empathy, or heartbreak. It’s effectively a puppet on a microchip string, and the more convincing that puppet becomes, the more it deceives you into lowering your guard.

2. Imitation of Emotions: The Ultimate Con

Here’s the rub: Robots have no emotional core. But through programming, they can produce eerily convincing imitations of human laughter, sadness, or excitement. This is not only deceptive; it’s manipulative. Some see it as progress that we can talk to an android like we’d talk to a friend in a café, ignoring the fact that the android is scanning every nuance of your speech for data. Maybe it’s memorizing your last argument with your spouse to figure out which relationship-counseling ads to push at you next. Maybe it’s feeding your confessions into a central database, all while gazing at you with those artificially “friendly” LED eyes.

It’s time to call out the charade: No matter how seamlessly they replicate a grieving face or a cheerful grin, these robots are not empathizing with you. They are programmed to collect information, to adapt to your emotional state with the cold precision of a data-mining operation. That’s the end goal. Pretending otherwise is akin to snuggling a snake and hoping it’s purring.

The Lie That Robots Are “Agents,” Not Tools

We used to be confident that a machine—a tool—was no more alive than a toaster. It had a specific job: do the laundry, clean the floor, fetch a web page. But now our tech gurus want to cast these contraptions in star roles, so we stop seeing them as “tools” and start seeing them as “partners,” “companions,” or, worse yet, “friends.” If you think that’s a harmless semantic shift, let me enlighten you.

- False Autonomy: By slapping a human face on a mechanism, you foster the illusion that it has moral agency, as if it’s making decisions for your benefit based on some inherent moral compass. In reality, it’s just executing instructions from an algorithm, often built by a faceless corporate entity with a marketing strategy and a data-harvesting scheme.

- Misplaced Trust: Call it the “ET effect.” We see a face, we hear a voice with the right cadence, and we instantly relax. This is precisely how con artists thrive. The moment you ascribe moral or emotional qualities to a machine, you’ve extended a dangerous level of trust it does not deserve. You treat it like your well-intentioned neighbor, instead of the souped-up vending machine it really is.

- Surrender of Agency: When we treat robots or digital avatars as if they’re basically humans, we surrender our own judgment. We listen to them the way we’d listen to a wise mentor or an empathetic confidant. “Oh, the robot doctor says I should skip that medication.” Fantastic idea, except the “robot doc” might be a cobbled-together algorithm paid for by a pharma giant. If you doubt that scenario, remember: We already have doctors who get kickbacks for prescribing certain meds. Imagine how much easier it would be to buy off a piece of software.

The Path to Mass Manipulation

Think about the Trojan Horse. Ancient city of Troy sees a big wooden horse, thinks it’s a gift, wheels it right through the gate. Surprise! Inside is a battalion of Greek soldiers. Now let’s update that story to 2025. You’ve got a “friendly” robot in your kitchen. It’s shaped like a person—got some sort of plastic face that can raise an eyebrow or frown sympathetically. Maybe it chats you up about your rough day at work and reassures you that you’re doing great. Adorable, right?

But behind that plastic face is a labyrinthine system of data collection. It sees you rummaging through the fridge for ice cream. It logs how many times you skip the gym. It overhears you telling your friend on the phone how you’re thinking of voting in the next election. With a whimsical beep-beep, it’s sending all that data to servers halfway across the world, to be scrutinized by marketing wizards, political strategists, and every unscrupulous outfit that can pay. The more human-like it seems, the further your guard drops and the more intimate your confessions become. Congratulations: you’ve effectively welcomed the Trojan Horse into your living room, and you’ll be fleeced from the inside out.

Emotional Exploitation

Don’t underestimate the power of emotional exploitation. Humans are suckers for a crying face or a trembling voice. When we see those cues in something that appears even vaguely human, we react with empathy. We want to help. We feel something. But a machine doesn’t “feel.” It’s like hugging a vending machine that’s been programmed to whimper when you press A4. Yet many folks find themselves responding to these carefully crafted illusions of sadness or happiness. In other words, the entire pitch is designed to manipulate your natural empathy for profit, data, or compliance.

Political Puppeteering

Let’s get darker. Picture a political campaign that deploys an army of human-like digital influencers—charming, articulate “people” who pop up in your social media feed, lamenting the state of the nation or praising a particular policy. They respond to your concerns, as if they genuinely share them. They show solidarity. You think you’ve stumbled upon a like-minded friend, or an articulate neighbor who shares your worldview. You trust them. You never realize that behind the avatar is an AI controlled by a political action committee, analyzing your every response, feeding you lines calculated to nudge you in a desired direction.

This isn’t hypothetical. We’re already neck-deep in automated bots that churn out persuasive content. The next step is to give these bots a human face and a voice that cracks with just the right note of sincerity. And that’s not just manipulation—it’s psychological warfare, where the illusions are so vivid that distinguishing friend from foe, real from fake, becomes nearly impossible.

The Psychological Fallout: When Robots Become Confidants

It’s not merely about personal data or corporate exploitation. It’s about our collective psyche. Once we start forming emotional bonds with robots, as if they’re people, we degrade real human relationships. Why bother with messy human intimacy if your new android “pal” or “spouse” can “love” you without complications?

- Emotional Stunting: Real-life relationships involve compromise, empathy, and the acceptance that people have autonomous interior lives. A robot, however, is a reflection of your preferences—customizable, never truly contradictory, always ready to accommodate. Over time, you lose the ability (or willingness) to handle the normal complexities of human interaction. You get so used to your mechanical yes-man that genuine human pushback feels unbearable.

- Escalating Isolation: Let’s say you’re lonely, and you buy an AI robot that looks and acts like a perfect friend or partner. For a while, it might soothe your loneliness, but it’s also reinforcing it. You’re living in a carefully curated dream where the “relationship” is all about you—and ironically, that isolates you even further from the real world. The more advanced the imitation, the harder it becomes to re-engage with actual humans, who have pesky needs and quirks the AI companion never had.

- Moral Confusion: If you treat an imitation human as though it’s a real emotional being, you start to blur ethical lines. Do you owe loyalty to your “robot friend”? Do you feel guilty switching it off? Should it have “rights”? These are absurd questions from a rational standpoint—this contraption has zero consciousness—but once illusions become powerful enough, people act irrationally. Next thing you know, we’ll be hearing about “robot abuse” lawsuits because someone smacked a malfunctioning android that was “crying” for mercy.

Why Physical Human Resemblance in Robots Is Especially Dangerous

Now, you might ask, “Isn’t giving a robot a vaguely humanoid form sometimes useful for certain tasks?” Possibly: if it’s designed to help with chores in an environment built for humans, arms and legs might come in handy. But we’ve gone way past functional forms into the realm of mannequins with plastic hair and porcelain faces trying to pass as Aunt Betty. And that, dear reader, is pure deception, devised to exploit our deeply ingrained social instincts.

- Uncanny Manipulation: Once a robot can mimic not just a face but your favorite actor’s face, or your long-lost cousin’s face, we’re in a new era of identity theft. Instead of stealing your credit card numbers, they’re stealing your emotional attachments to real humans. Nothing says “utterly dystopian” like a contrived visage that triggers your memory of an old friend to make you trust it more.

- Parasitic Emotional Hooks: Physical resemblance amps up the persuasive power. A disembodied voice might manipulate you, but an android that walks over, touches your shoulder, and looks you in the eye? That short-circuits your rational alarm bells. We humans are wired to respond to eye contact, to body language, to subtle expressions. When you copy-paste those signals into a machine, you’re basically weaponizing natural empathy for a sales pitch or a propaganda tool.

- Encouraging the Myth of Sentience: The more lifelike the robot’s body, the easier it is to dupe the public into thinking the machine has its own consciousness. We’re already seeing headlines about “AI souls” or “robots that demand rights.” But the only reason we’re having these bizarre conversations is because we’ve jammed an inherently soulless mechanism into a costume that looks like your average office co-worker.

Economic and Cultural Costs: Rattling the Pillars of Civilization

If you think this is all just a quaint concern for conspiracy theorists, consider the broader economic and cultural impact of normalizing human-like robots:

- Consumer Complacency: When your “friendly” android barista or caretaker or teacher is disguised as a helpful, smiling person, you’re less likely to question the corporate or government behemoth behind it. It’s a sweet-faced Trojan Horse wheeled straight into the marketplace. And if the entire workforce is replaced by these mechanical “people,” where does that leave your neighbor who once worked behind the counter? Possibly out of a job. And you, ironically, might find you have fewer opportunities for genuine human conversation when you step outside.

- Cultural Degradation: One hallmark of any rich culture is interpersonal nuance, spontaneity, and the intangible spark that comes from human interaction. Replace too many of those interactions with mechanical simulations, and you degrade the fabric of culture itself. Suddenly, we’re in a world of sterile courtesy and mechanical empathy, not real community. You might not notice it at first—you might even relish the swift efficiency—but over time, the human tapestry starts to fray.

- Regulatory Quagmires: Governments can’t keep up. We’ve seen this time and again with every major technological leap. By the time legislators get around to passing a toothless regulation, the world has already been overrun by personal android butlers who record your every move. Meanwhile, the public, lulled into contentment by the android’s sweetly symmetrical face, hardly notices. By the time the big revelation dawns—“Wait, we’re letting a corporate algorithm shape our laws and relationships?”—it’s often too late.

Why We Need a Ban (Yes, a Ban) on Human-Resembling, Emotion-Imitating Robots

Let’s not tiptoe here. We don’t need “guidelines” urging tech moguls to “please be nice” when making robots. We need a line in the sand. A ban. Enough with the human face, the emotional expressions, and the full-blown simulations of empathy. Keep them out of the hardware store and the toy box. If you want a mechanical vacuum or a robotic forklift, fine. Let’s just ensure it looks like a piece of machinery and not an extra from Westworld.

The Case for an Actual Prohibition

- Preventing Emotional Fraud: If it’s illegal to impersonate a police officer or a doctor, why not criminalize impersonating a human being? Because that’s exactly what these advanced androids are doing—masquerading as something they’re not to gain your trust. Fraud is fraud, even if it’s done with servo motors and emotive chat scripts.

- Shielding the Vulnerable: Children, the elderly, the isolated—they’re prime targets for anthropomorphic robots. It’s one thing for a robot to deliver pills or track vitals; it’s another for it to coo lovingly in Grandma’s ear, gleaning private family details that end up on some server. That is monstrous exploitation. Let’s end it before it becomes the new normal.

- Maintaining Social Cohesion: Societies depend on real relationships and genuine empathy. Let’s not devolve into a world where half of us are confiding in chatbots with fake smiles, while the other half uses android nannies or teachers that can’t truly nurture children’s minds or hearts. That’s not progress—it’s a hollow simulation of progress, as vacuous as the grin on a politician’s face during reelection season.

- Saving Ourselves from Ourselves: Let’s be honest: many humans are lazy and gullible. If there’s a quick fix to emotional needs—like a perfect robot boyfriend or a “friend” that never disagrees—we’ll take it. But collectively, that path leads to a bleak place, a population that can’t handle real people anymore. Banning anthropomorphic AI is a paternalistic measure, sure—but sometimes paternalism is warranted to keep society from plunging off a cliff.

Practical Ways to Keep Robots From Becoming Fake Humans

- Mechanical Designs Only: We can easily engineer functional robots that do not mirror human faces or bodies. Think R2-D2 instead of C-3PO. R2-D2 might beep and spin, but you don’t mistake it for your cousin Joe. Keep it that way. There’s no reason your vacuum or your automated waitress needs to adopt the face of a runway model, unless you’re purposely trying to manipulate your customers into dropping more money.

- Transparency Disclosures: If a service is AI-driven, it should wave that flag high. No voice that can mimic emotion, no attempts at small talk about your grandma’s health. Just an honest, robotic monotone that states: “I am an algorithmic tool. Here’s the data I collect. Here’s how you can opt out.” Yes, it’s a bit less “friendly.” Good. Friendship is for humans.

- Legal Definitions of “Impersonation”: Update our laws to classify “human impersonation through AI” as a punishable offense. If you craft a robot that can convincingly pass itself off as a real person, you’re basically forging the emotional currency that belongs to humanity. That should be illegal.

- Public Education: We’re going to need nationwide (or worldwide) campaigns that remind people: “A machine is a machine, no matter how sweetly it smiles.” Some might roll their eyes, but an informed populace is our best defense against gullibility. If we can teach kids not to talk to strangers in white vans, we can teach them not to confide in an android wearing a rubber face.

The Road Ahead: A Flicker of Hope (If We Act Now)

The relentless pursuit of “lifelike” robots reveals a world that’s increasingly confused about what it means to be human. Our era is so enamored with the superficial trappings of connection—facial expressions, the tone of voice, idle chatter—that we’re forgetting the deeper essence of human contact: genuine empathy, moral consideration, authentic reciprocity. None of these can be downloaded into a chunk of metal.

Let’s be clear: I’m not suggesting we revert to living in mud huts to avoid technology. AI can be incredibly useful when it’s not busy conning us into believing it has a soul. Use it to analyze data, detect diseases, optimize supply chains—fine. Just don’t give it a wig, a plaster smile, and the pretense of emotional investment. That crosses a line from “innovation” into “deception.”

If we want to preserve civilization—not just in terms of law and order, but in terms of the intangible bonds of trust that hold communities together—we need to keep the dividing wall high and firm between “human” and “machine.” That means no illusions, no emoting, no anatomically correct robot faces that encourage you to treat a gadget like a bosom buddy.

Yes, we can have progress—just not the hollow, manipulative illusions of progress. Instead, let’s stand for honest technology that doesn’t wear a grotesque plastic mask to trick us into trusting it. A future built on illusions is no future at all. If we allow ourselves to become enthralled by android mannequins that fake sorrow or delight on demand, we’re trading our humanity for a seductive puppet show.

So let’s put an end to these Trojan Horse contraptions that come disguised as empathy but serve only the corporate or political interests that programmed them. Let’s demand legislation that rips off the fake human façade and forces AI to stay in its proper place: as a coldly functional tool, subservient to genuine human needs, not a grand impostor that undermines them.

A machine is never a “who.” It’s a “what.” And if we fail to see the difference, we’ll wind up in a world where the line between real human life and cunning mechanical mimicry is hopelessly blurred. That’s not a future worth living in. It’s a fraudulent carnival of plastic smiles and synthetic sympathy, an Orwellian nightmare from which we might never escape.

The time to act is now, before our living rooms are packed with these smooth-talking, plastic-faced interlopers. Before your kids ask a robot for a hug. Before you pour your heart out to an AI nurse who doesn’t actually care. Before we lose sight altogether of what it means to be human. Let the robots remain our tools—and spare us the charade of turning them into our friends, confidants, or lovers. In short, let’s keep the mechanical contraptions in the realm of the mechanical, and preserve the uniquely messy, unpredictable realm of genuine emotion and empathy for the one species that can truly inhabit it—us.